The discovery of algorithms that enable better and/or new audio-visual user experiences may trigger the development and deployment of highly rewarding solutions. By joining MPEG, companies owning good technologies have the opportunity to make them part of a standard that will help industry develop top-performance, interoperable products, services and applications.

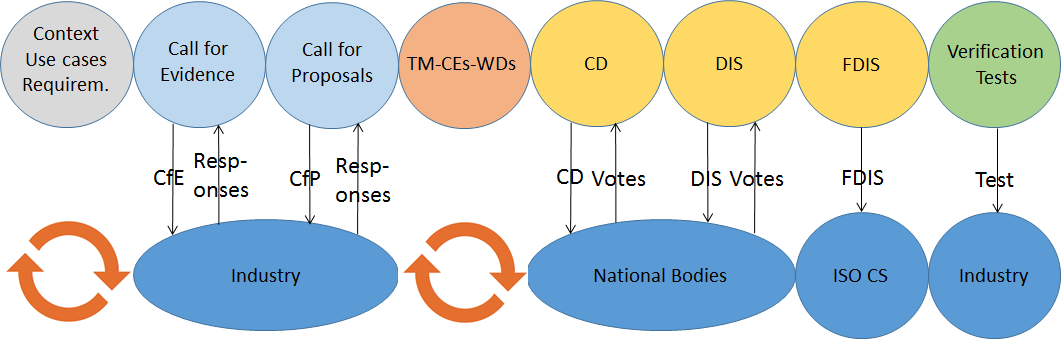

Figure 9 depicts how MPEG has extended the ISO standard development process to suit the needs of a field where sophisticated technologies are required to make viable standards.

Figure 9: The MPEG process to develop standards

Members bring ideas to MPEG for presentation and discussion at MPEG meetings. If an idea is found interesting and promising, an ad hoc group is typically established to explore the opportunity further until the next meeting. The proposer of the idea is typically appointed as chair of the ad hoc group. In this way MPEG offers proposers the opportunity to become the entrepreneurs who can convert an idea into an MPEG standard.

Getting to a standard usually takes time: the newer the idea, the more time it may take to make it actionable by the MPEG process. After the idea has been clarified, the first step is to understand:

1) the context for which the idea is relevant;

2) the use cases the idea offers advantages for;

3) the requirements that a solution should satisfy to support the use cases.

Even when the idea, context, use cases and requirements have been clarified, it does not follow that technologies exist that can be assembled to provide the needed solution. For this reason, MPEG typically produces and publishes a Call for Evidence (CfE) – sometimes more than one – with attached context, use cases and requirements. The CfE asks companies who think they have technologies satisfying the requirements to demonstrate what they can achieve. At this stage companies are not asked to describe how the results have been achieved; they are only asked to show the results. In many cases respondents are asked to use specific test data to facilitate comparison between different demonstrations. If MPEG does not have those test data, it will ask industry (not necessarily only MPEG members) to provide them.

If the result of the CfE is positive, MPEG will move to the next step and publish a Call for Proposals (CfP), with attached context, use cases, requirements, test data and evaluation method. The CfP requests companies who have technologies satisfying the requirements to submit responses to the CfP where they demonstrate the performance and disclose the exact nature of the technologies that achieve the demonstrated results.

Let’s see how this process has worked out in the specific case of neural network compression.

Table 5 – Example of idea up to Call for Proposals

| Mtg | YY | MM | Actions |
|---|---|---|---|
| 120 | 17 | Oct | Presentation of idea of compressing neural networks |
| | | | Approval of CNN in CDVA (document) |
| 121 | 18 | Jan | Release of Use cases and requirements |
| 122 | 18 | Apr | New release of Use cases and requirements |
| | | | Release of Call for Test Data |
| | | | Approval of Draft Evaluation Framework |
| 123 | 18 | Jul | Release of Call for Evidence, Use cases and requirements |
| | | | New release of Call for Test Data |
| | | | New version of Draft Evaluation Framework |
| 124 | 18 | Oct | Approval of Report on the CfE |
| | | | Release of CfP, Use cases and requirements, Evaluation Framework |
| 126 | 19 | Mar | Responses to Call for Proposals |
| | | | Evaluation of responses based on Evaluation Framework |
| | | | Definition of Core Experiments |

We can see that Use Cases and Requirements are updated at each meeting and made public so as to get feedback even from people who are not part of the ISO process, that test data are requested from industry, and that the Evaluation Framework is developed well in advance of the CfP. In this particular case it has taken about 18 months just to move from the idea of compressing neural networks to CfP responses.

A meeting where CfP submissions are due is typically a big event for the community involved. Knowledgeable people say that such a meeting is often more intellectually rewarding than attending a conference. How could it be otherwise, if participants can not only understand and discuss the technologies but also see and judge their actual performance? Everybody feels part of an engaging process of building a “new thing”. Read here an independent view.

If MPEG concludes that the technologies submitted and retained are sufficient to start the development of a standard, the work is moved from the Requirements subgroup, which typically handles the process of moving from idea to proposal submission and assessment, to the appropriate technical group. If not, the idea of creating a standard is – maybe temporarily – dropped or further studies are carried out or a new CfP is issued calling for the missing technologies.

If work proceeds, members involved in the discussions need to decide which technologies are useful to build the standard. Results are there but questions pop up from all sides. Those meetings are good examples of the “survival of the fittest”, a principle that applies to living beings as well as to technologies.

Eventually the group identifies useful technologies from the proposals and builds an initial Test Model (TM) of the solution. This is the starting point of a cyclic process where MPEG experts do the following:

- Identify critical points of the TM;

- Define which experiments – called Core Experiments (CE) – should be carried out to improve TM performance;

- Review members’ submissions;

- Review CE technologies and adopt those bringing sufficient improvements.

At the right time (which may very well be the meeting where the proposals are reviewed or several meetings after), the group produces a Working Draft (WD). The WD is continually improved following the four steps above.
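The cycle above can be pictured as a simple loop. The sketch below is purely illustrative: the `CoreExperiment` record, the single numeric `gain` score and the `min_gain` threshold are assumptions made for the example, not MPEG definitions. In practice adoption is decided by experts weighing several criteria, not one number.

```python
from dataclasses import dataclass, field


@dataclass
class CoreExperiment:
    """Hypothetical record of one CE result (names are illustrative)."""
    technology: str
    gain: float  # assumed measure: improvement over the current TM, in percent


@dataclass
class TestModel:
    """Hypothetical Test Model, reduced to a list of adopted technologies."""
    adopted: list = field(default_factory=list)


def review_meeting(tm, results, min_gain):
    """Adopt the CE technologies whose gain reaches the assumed threshold."""
    for ce in results:
        if ce.gain >= min_gain:
            tm.adopted.append(ce.technology)
    return tm


tm = TestModel(adopted=["baseline"])
results = [
    CoreExperiment("tool A", gain=2.5),
    CoreExperiment("tool B", gain=0.3),
]
review_meeting(tm, results, min_gain=1.0)
print(tm.adopted)  # ['baseline', 'tool A']
```

Each call to `review_meeting` stands for one meeting; running it again with new CE results mirrors the way the TM, and later the WD, is improved meeting after meeting.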

The start of a new standard is typically not without impact on the MPEG ecosystem. Members may wake up to the need to support new requirements, realise that specific applications may require one or more “vehicles” to embed the technology in those applications, or conclude that the originally conceived solution needs to be split into more than one standard.

These and other events are handled by convening joint meetings between the group developing the technology and technical “stakeholders” in other groups.

Eventually MPEG is ready to “go public” with a document called Committee Draft (CD). However, this only means that the solution is submitted to the national standard organisations – National Bodies (NB) – for consideration. NB experts vote on the CD with comments. If a sufficient number of positive votes are received (this is what has happened for all MPEG CDs so far), MPEG assesses the comments received and decides on accepting or rejecting them one by one. The result is a new version of the specification – called Draft International Standard (DIS) – that is also sent to NBs where it is assessed again by national experts, who vote and comment on it. MPEG reviews NB comments for the second time and produces the Final Draft International Standard. This, after some internal processing by ISO, is eventually published as an International Standard.
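The sequence of document stages described above can be summarised as a small state machine. This is a minimal sketch: the `Stage` enum and the `advance` function are names invented for the example, and the rule that a document stays at its stage when a ballot fails is an assumption, since the text only covers the case where the ballot succeeds.

```python
from enum import Enum


class Stage(Enum):
    WD = "Working Draft"
    CD = "Committee Draft"
    DIS = "Draft International Standard"
    FDIS = "Final Draft International Standard"
    IS = "International Standard"


# Order of the stages as described in the text.
ORDER = [Stage.WD, Stage.CD, Stage.DIS, Stage.FDIS, Stage.IS]

# Stages the text describes as voted on, with comments, by National Bodies.
BALLOTED = {Stage.CD, Stage.DIS}


def advance(stage, ballot_passed=True):
    """Return the next stage; stay put if an NB ballot fails (assumption)."""
    if stage is Stage.IS:
        return stage
    if stage in BALLOTED and not ballot_passed:
        return stage
    return ORDER[ORDER.index(stage) + 1]


stage = Stage.WD
path = [stage]
while stage is not Stage.IS:
    stage = advance(stage)
    path.append(stage)
print(" -> ".join(s.name for s in path))  # WD -> CD -> DIS -> FDIS -> IS
```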

MPEG typically deals with complex technologies that companies consider “hot” because they are urgently needed for their products. Just as product development in a company goes through different phases (alpha/beta releases etc., in addition to internal releases), achieving a stable specification requires many reviews. Because CD/DIS ballots may take time, experts may come to a meeting reporting bugs found in, or proposing improvements to, the document out for ballot. To take advantage of this additional information, which the group scrutinises on its merits, MPEG has introduced an unofficial “mezzanine” status of the document called “Study on CD/DIS”, in which proposals for bug fixes and improvements are added to the document under ballot. These “Studies on CD/DIS” are communicated to the NBs to facilitate their votes on the official documents under ballot.

Let’s see in the table below how this part of the process has worked for the Point Cloud Compression (PCC) case. Only the most relevant documents have been retained.

Table 6 – An example from CfP to FDIS

| Mtg | YY | MM | Actions – Approval of |
|---|---|---|---|
| 120 | 17 | Oct | Report on PCC CfP responses |
| | | | 7 CEs related documents |
| 121 | 18 | Jan | 3 WDs |
| | | | PCC requirements |
| | | | 12 CEs related documents |
| 122 | 18 | Apr | 2 WDs |
| | | | 22 CEs related documents |
| 123 | 18 | Jul | 2 WDs |
| | | | 27 CEs related documents |
| 124 | 18 | Oct | V-PCC CD |
| | | | G-PCC WD |
| | | | 19 CEs related documents |
| | | | Storage of V-PCC in ISOBMFF files |
| 128 | 19 | Oct | V-PCC FDIS |
| 129 | 20 | Jan | G-PCC FDIS |

I would like to draw your attention to “Approval of 27 CEs related documents” in the July 2018 row. The definition of each of these CE documents requires lengthy discussions by the experts involved, because the documents describe the experiments that will be carried out by different parties at different locations and how the results will be compared for a possible decision. It should not be a surprise if some experts work from Thursday until Friday morning to get documents approved by the Friday plenary.

I would also like to draw your attention to “Approval of Storage of V-PCC in ISOBMFF files” in the October 2018 row. This is the start of a Systems standard that will enable the use of PCC in specific applications, as opposed to being just a “data compression” specification.

The PCC work item is currently seeing the involvement of some 80 experts. If you consider that there were more than 500 experts at the October 2018 meeting, you should have a pretty good idea of the complexity of the MPEG machine and the amount of energy poured in by MPEG members.

In most cases MPEG performs “Verification Tests” on a completed standard to give industry precise indications of the performance that can be expected from an MPEG compression standard. To do this the following is needed: specification of tests, collection of appropriate test material, execution of reference or proprietary software, execution of subjective tests, test analysis and reporting.

Very often, as a standard takes shape, new requirements for new functionalities are added. They become part of a standard either through the vehicle of “Amendments”, i.e. separate documents that specify how the new technologies can be added to the base standard, or “New Editions” where the technical content of the Amendment is directly integrated into the standard in a new document.

As a rule, MPEG develops reference software for its standards. The reference software has the same normative value as the standard expressed in human language. Neither prevails over the other. If an inconsistency is detected, one is aligned to the other.

MPEG also develops conformance tests, supported by test suites, to enable a manufacturer to judge whether its implementation of 1) an encoder produces correct data, by feeding them into the reference decoder, or 2) a decoder is capable of correctly decoding the test suites.
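The two conformance checks can be illustrated with a toy example. Everything here is hypothetical: a trivial run-length codec stands in for a real MPEG codec, and `reference_decode`, `encoder_conforms`, `decoder_conforms` and `my_encode` are names invented for the sketch. Because the toy codec is lossless, the encoder check compares the decoded output with the input; for a lossy codec the check would rather be that the reference decoder accepts the stream.

```python
def reference_decode(stream):
    """Toy 'reference decoder': expands (count, byte) pairs into bytes."""
    out = bytearray()
    for count, value in stream:
        if count < 1 or not 0 <= value <= 255:
            raise ValueError("non-conformant stream")
        out.extend([value] * count)
    return bytes(out)


def encoder_conforms(encode, samples):
    """1) Feed the encoder's output into the reference decoder."""
    try:
        return all(reference_decode(encode(s)) == s for s in samples)
    except ValueError:
        return False


def decoder_conforms(decode, test_suite):
    """2) Check that the decoder reproduces the expected output of each stream."""
    return all(decode(stream) == expected for stream, expected in test_suite)


def my_encode(data):
    """Hypothetical implementation under test: a simple run-length encoder."""
    runs = []
    for b in data:
        if runs and runs[-1][1] == b:
            runs[-1] = (runs[-1][0] + 1, b)
        else:
            runs.append((1, b))
    return runs


# Hypothetical test suite: (stream, expected decoded output) pairs.
test_suite = [([(3, 97), (1, 98)], b"aaab"), ([], b"")]
print(encoder_conforms(my_encode, [b"aaab", b"", b"xyz"]))  # True
print(decoder_conforms(reference_decode, test_suite))       # True
```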

Finally, it may happen that bugs are discovered in a published standard. This is an event to be managed with great attention because industry may already have released implementations on the market.

MPEG is definitely a complex machine because it needs to assess if an idea is useful in the real world, understand if there are technologies that can be used to make a standard supporting that idea, get the technologies, integrate them, develop the standard and test the goodness of the standard for the intended purpose. Often it also has to provide integrated audio-visual solutions where a line-up of standards fits together nicely to provide a system specification.

MPEG needs to work like a company developing products, but it is not a company. Fortunately, one can say, but also unfortunately because it has to operate under strict rules that apply to thousands of ISO committees, working for very different industries with very different mindsets.
