Introduction

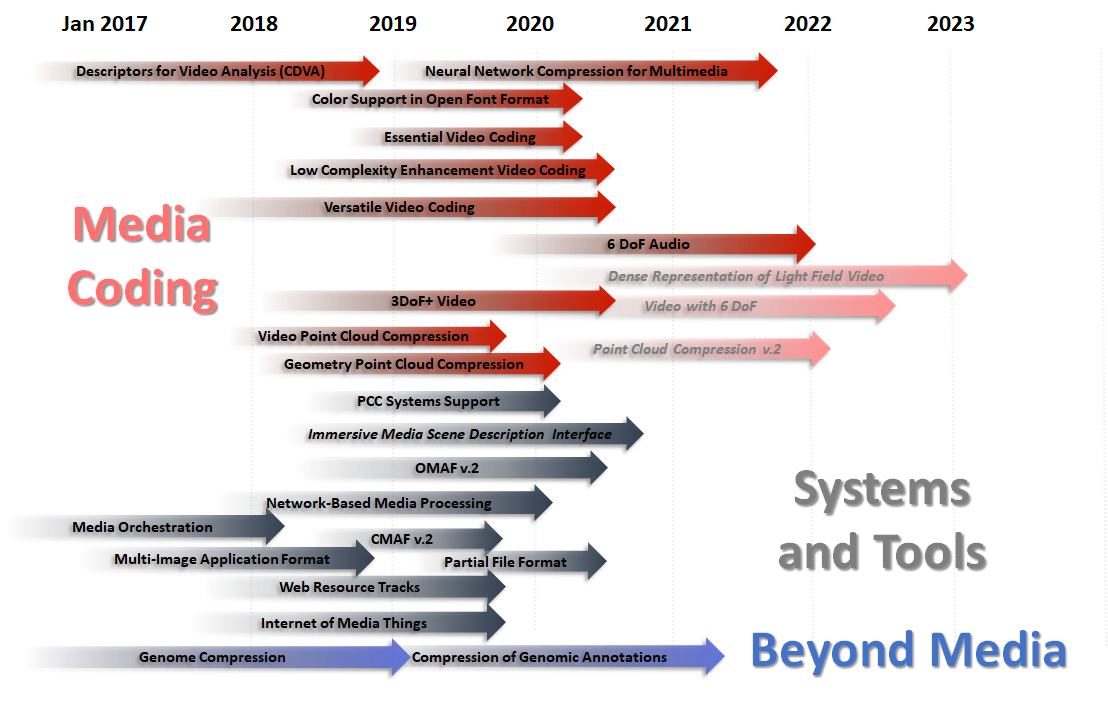

The MPEG success describes how MPEG standards thave been (and often continue to be after many years) extremely successful. As it is difficult to single out those that will be successful in the future 😊, the reasonable thing to do is to show the entire MPEG work plan.

Figure 15: The MPEG work plan (March 2019)

At the risk of making the wrong bet 😊, the following introduces some of the most high-profile standards under development, subdivided into three categories: Media Coding, Systems and Tools, and Beyond Media. If you are interested in MPEG standards, however, you had better become acquainted with all ongoing activities. In MPEG, sometimes the last become the first.

Media Coding

- Versatile Video Coding (VVC): is the flagship video compression activity that will deliver another round of improved video compression. It is expected to be the platform on which MPEG will build new technologies for immersive visual experiences (see below).

- Enhanced Video Coding (EVC): is a shorter-term project with less ambitious goals than VVC’s. EVC is designed to satisfy the urgent needs of those who require a standard with a hopefully simpler IP landscape.

- Immersive visual technologies: investigates technologies applicable to visual information captured by different camera arrangements, as described in Immersive visual experiences.

- Point Cloud Compression (PCC): refers to two standards capable of compressing 3D point clouds captured with multiple cameras and depth sensors. The algorithms in both standards are lossy, scalable, progressive and support random access to point cloud subsets. See Immersive visual experiences for more details.

- Immersive audio: MPEG-H 3D Audio supports a 3 Degrees of Freedom or 3DoF (yaw, pitch, roll) experience at the movie “sweet spot”. More complete user experiences, however, are needed, i.e. 6DoF (adding translation along x, y and z). These can be achieved with additional metadata and rendering technology.
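
The difference between 3DoF and 6DoF mentioned in the list above can be made concrete with a small sketch. It is only an illustration: the class names, field names and units are hypothetical and are not taken from any MPEG specification.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class Pose3DoF:
    """Orientation only: the user stays at the "sweet spot" and turns the head."""
    yaw: float    # rotation about the vertical axis (degrees, assumed unit)
    pitch: float  # rotation about the side-to-side axis (degrees, assumed unit)
    roll: float   # rotation about the front-to-back axis (degrees, assumed unit)


@dataclass(frozen=True)
class Pose6DoF(Pose3DoF):
    """Orientation plus position: the user can also move through the scene."""
    x: float = 0.0  # translation (metres, assumed unit)
    y: float = 0.0
    z: float = 0.0


def degrees_of_freedom(pose) -> int:
    """Count the independent quantities that describe the pose."""
    return len(fields(pose))


# A seated viewer who only turns the head, and a viewer who also walks around.
seated = Pose3DoF(yaw=30.0, pitch=0.0, roll=0.0)
walking = Pose6DoF(yaw=30.0, pitch=0.0, roll=0.0, x=1.5, y=0.0, z=-0.5)
```

A 3DoF renderer only needs the three angles, while a 6DoF renderer must also react to the three position values, which is why the text says additional metadata and rendering technology are required.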

Systems and Tools

- Omnidirectional media format (OMAF): is a format supporting the interoperable exchange of omnidirectional (Video 360) content. In v1 users can only yaw, pitch and roll their head, but v2 will support limited head translation movements. More details in Immersive visual experiences.

- Storage of PCC data in MP4 FF: MPEG is developing systems support to enable storage and transport of compressed point clouds with DASH, MMT etc.

- Scene Description Interface: MPEG is investigating the interface to the scene description (not the technology) to enable rich immersive experiences.

- Service interface for immersive media: Network-based Media Processing will enable a user to access potentially sophisticated processing functionality made available by a network service via a standard API.

- IoT when Things are Media Things: Internet of Media Things (IoMT) will enable the creation of networks of intelligent Media Things (i.e. sensors and actuators).

Beyond Media

- Standards for biotechnology applications: MPEG is finalising all 5 parts of the MPEG-G standard and establishing new liaisons to investigate new opportunities.

- Coping with neural networks everywhere: shortly (from 25 March 2019), after receiving responses to its Call for Proposals for Neural Network Compression, MPEG will start developing Compression of neural networks for multimedia content description and analysis (part 17 of MPEG-7). See Moving intelligence around.
